On the road to the new material system

Not started yet.

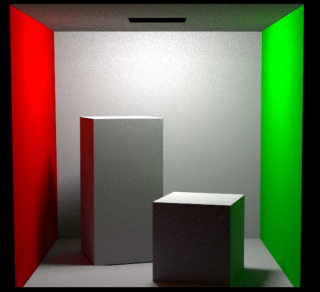

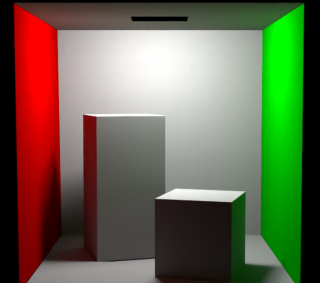

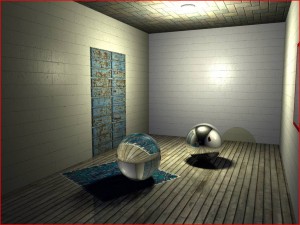

I decided that I didn’t want errors in my reflection/transmission code to interfere with the new material system, so I first had to make sure that code was working correctly. I copied and adapted the code from the raytracer into the path tracer and created a scene showing all 4 materials (emissive, diffuse, transmissive and reflective) in action. I already had those working a few years ago, so I was sure it would be straightforward.

FALSE!

Problems of all kinds emerged, and I had to spend some time tracking them down and fixing them. I found bugs in how I was modulating the incident light, in how I was calculating the intersections, in the “shape lights”, in the Fresnel code, and more. Not all of them have been fixed yet. For example, shape lights work as long as I use a square or a cube, but with more complex shapes (e.g. Suzanne from Blender) it looks like no light is emitted at all. From a first investigation, every ray emitted is then “blocked” by a shadow test.
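A common cause of this kind of symptom, which may or may not be what is happening here, is a shadow ray that is allowed to hit the emitter's own surface at the sampled point. The sketch below is not the path tracer's actual code: `EPS`, `hit_sphere` and `is_occluded` are hypothetical names, and spheres stand in for the scene geometry. It only shows the idea of limiting the shadow ray to the open interval between the shading point and the light sample.

```python
import math

EPS = 1e-4  # assumed offset; the right value depends on scene scale


def hit_sphere(origin, direction, center, radius, t_min, t_max):
    """Return True if a normalized ray hits the sphere with t in (t_min, t_max)."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return False
    root = math.sqrt(disc)
    return any(t_min < t < t_max for t in (-b - root, -b + root))


def is_occluded(point, light_point, spheres):
    """Shadow test between a shading point and a sampled point on a light."""
    to_light = [l - p for l, p in zip(light_point, point)]
    dist = math.sqrt(sum(v * v for v in to_light))
    direction = [v / dist for v in to_light]
    # Stop just short of the light sample, so the emitter's surface at the
    # sampled point is not counted as a blocker.
    return any(
        hit_sphere(point, direction, center, radius, EPS, dist - EPS)
        for center, radius in spheres
    )
```

With a concave mesh such as Suzanne, other parts of the emitter can still legitimately block a sample, so a test like this would only rule out the self-intersection case.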

On a more positive note, the output (at least for the simple scenes I’ve tested so far) now closely resembles what comes out of Cycles. I’ve also implemented a “true” sphere light to replace the previous one, which was really fast and handy for modelling point lights but was still a delta light. I don’t know how much this will improve things, but I already had a “shape light” which just “wrapped” a shape, so I only needed to pass it a sphere instead of a mesh.
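To make the difference from a delta light concrete: a sphere light has a surface that can be sampled (and hit by rays), whereas a delta light is a single point. A minimal sketch of uniform area sampling on a sphere follows, assuming the light is described by a centre and a radius. The function name `sample_sphere_surface` and its return values are hypothetical and not taken from the path tracer.

```python
import math


def sample_sphere_surface(center, radius, u1, u2):
    """Map two uniform numbers in [0, 1) to a point on the sphere, uniform by area."""
    z = 1.0 - 2.0 * u1                      # uniform in (-1, 1]
    r = math.sqrt(max(0.0, 1.0 - z * z))    # radius of the circle at height z
    phi = 2.0 * math.pi * u2                # uniform angle around the z axis
    normal = (r * math.cos(phi), r * math.sin(phi), z)
    point = tuple(c + radius * n for c, n in zip(center, normal))
    pdf_area = 1.0 / (4.0 * math.pi * radius * radius)  # 1 / surface area
    return point, normal, pdf_area
```

Sampling the whole surface also picks points facing away from the shading point, which a shadow test would then reject. Sampling only the visible cone of the sphere is a known refinement, left out here to keep the sketch short.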

I hope to fix the shape lights over the weekend.